test post using Flock beta

The failure of RSS readers

- Only info junkies want more content

- Most people will only track a limited set of news sources, probably no more than 5

- RSS adoption is pretty darn slow compared to other media technologies in the past

- Nobody knows what RSS is...or cares once they do know

- The RSS readers like Bloglines and NewsGator still don’t have mass appeal

- Mr. Joe Average won’t ever see the value in consuming information this way

I typically argue that these problems are a function of the reader tools, which are incapable of serving both info junkies and the other 95% of the world simultaneously. But I also have a hard time seeing what kind of functionality Bloglines could build to make it an appealing tool for people like my mother. She does not want a list of feeds to monitor. The My Yahoo! environment is more than enough for her already. And if Bloglines looks more like a dashboard, then it will lose its core user base, which wants to manage lots of feeds.

Volume isn’t the problem. The problem is about design, utility, and quality. It’s also about the distribution model.

Digital music adoption should be an indication of what could happen with the right pieces in place. According to a recent report at Macworld, "Consumers will buy more than 104 million hard drive and flash-based digital music players by 2009, up from 27.8 million in 2004." The devices are solid. The content suits the tools. The distribution and revenue streams are tied together effectively. And the software design lowers the barrier for consumption. Volume is picking up because the model is complete.

"The International Federation of Phonographic Industries said that 180 million single tracks were downloaded legally in the first six months of [2005], compared to 57 million tracks in the first half of 2004 and 157 million for the whole of last year."

The real qualitative impact of RSS on the wider public will happen when the important individual bits of data (movie recommendations from my friends, events happening in my neighborhood, deals on airfare for the places I want to go, etc.) are delivered to people more efficiently. Something needs to serve people the right content in the right format on the right device at the right time. The consumer shouldn’t search for that data. The data should find us.

RSS is the right protocol, but the tools that deliver and present feed items should be smarter. They need to know the types of things that people want to receive and parameters for evolving what is delivered based on user input. Does that come in the form of attention data? Does it come in the form of a new tool design of some sort? Is it a reader that morphs as it gets used more and more?

Personally, I’m a big Bloglines fan. It helps me on many different levels. I know I’m not alone, but I also know that we’re in the minority. Let’s face it...your mother isn’t going to change her home page to Bloglines any time soon. And the cute Rojo icons don’t make it any more interesting to someone who has no idea why reading this way could be better than reading a newspaper.

I agree with the arguments that the wider public might not collect volumes of feed sources to track. But I’m sure people will consume more content via RSS if the right content is delivered in the right format in the right place at the right time. I’m also sure that neither the tri-pane reader nor the AJAX dashboard is going to be the catchall solution for RSS consumption. RSS will be served in many forms.

How changes in supply and demand for important content made RSS so relevant

The period from about 1995 to 1997 was all about browsing. The information that was not only relevant to me but also important to me as an information consumer was pretty limited. Even though there were millions of pages to see, I was able to find the ones that I actually really needed by typing in the domain of the source that I required.

Enough material had accumulated online by the late 1990s to necessitate aggregate views of things for the information consumer. The ratio of unusable crap to important information was pretty bad. So, portals were created to help me navigate to things that mattered to me.

By 2000, there was so much content on the Internet that the portals were unable to provide a comprehensive view of everything that might matter to me. Massive indexing efforts became very important. The ratio of crap to value was still bad, but the universe of valuable information reached new levels. There was something for everyone out there. It seemed that if you had any question or need that there was a solution somewhere on the Internet. The demand for quicker access to the important things put the search engines in a very powerful position to mediate the information consumption transaction.

In economic terms, the supply of important information to any one individual was great enough to warrant a price for access to it. That price was the quest itself. Search engines were able to locate the needle in the haystack but not without a lot of coaxing from the seeker, an acceptable pain point given the demand for the result.

Now we’ve come to a point where the supply of valuable information has outpaced the demand. There is relevant stuff out there for every moment of the day. And the competition for my attention as an information consumer is getting tougher all the time. There are multiple sources for weather data, stocks, what to buy in any given situation from whom and for how much, how to get places, people’s opinions on everything, research on anything, etc. A moment in your day doesn’t pass without an information source being readily available to serve you.

The new era is about streamlining information sources for consumers. It’s not that demand for relevant and personalized information fell. I believe the opposite is true. But the supply has increased even faster, putting the information consumer in a power position.

It’s no surprise then that people jumped to RSS to control information flow. We are telling the creators of information that we want filters, we want flow control, and we want those controls in our own hands. It’s the era of syndication and subscriptions. I’ll tell you what information I want, and then you come find me with the right data in the right place at the right time.

This fundamental change in the relationship between information consumers and publishers could play out in any number of ways. The publishers are going to have to work harder to make their data easily consumable and available in different ways. That means microformats, open databases, RSS, APIs, etc. The good news is that I think people will be forthcoming with their needs. They will show in their behavior and by the explicit relationships they create what kinds of things they want and when they want them. The trick is to listen carefully and to answer swiftly.

Battelle makes it to WSJ bestsellers list

Just started reading it this morning, and I really like the framework you use for the book in the "database of intentions", though I find that a very centralized view of the world and the Internet. I'm wondering if the distributed attention happening outside of search is something you get into. That's the market the browsers could leverage if they were smart and then possibly trump Google with an even richer knowledge pool. Looking forward to the rest of it.

A few random reflections on my birthday

- Everywhere I look I see suspicious motivations. My psyche has been larrydavid-ified...and I kind of like it.

- Being 35 doesn't feel old really. Barry Bonds is cranking out homers still and he's 41. Does BALCO offer an anti-aging pill?

- It's harder to lose weight at 35. Is that because the fat cells are slower to melt away or because chocolate chip cookies taste better than they used to?

- I miss being a smoker.

- I'm starting to freak out about earthquakes, but I should probably be freaking out about avian flu, too. Mix in terrorism, poverty and pedophilia and you start wondering why we bring kids into this world at all.

- You can be old and wear cool shades, but I'm not sure that's also true about shoes. Do you cross that line in your 30s or 40s?

- Nerdiness doesn't age well. Unless you're rich. I can't change the first part. Ideas on changing the second?

- Top 5 favorite audio discoveries in the last year:

- Top 5 video:

  - I HEART Huckabees

  - Curb Your Enthusiasm (an ongoing favorite)

  - The Office (in heavy rotation on my DVD player even still)

  - Eternal Sunshine of the Spotless Mind

  - Netflix shared lists

- Top 5 technologies:

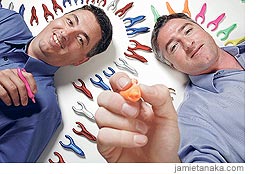

Entrepreneur makes it big: the PenAgain story hits the WSJ

After seeing so many bad business ideas get floated and greedy MBAs cashing out for big bucks during the boom, it's refreshing to see some ingenuity get rewarded the good old-fashioned way...through hard work and perseverance.

"Mr. Roche's path to [Wal-Mart], began in 1987 during a Saturday detention at his Palo Alto, Calif., high school. On lunch break, Mr. Roche wandered into a flea market where he purchased a toy robot that, when twisted a certain way, doubled as a pen. While fiddling with the robot and a lighter, he burned the writing tip off one leg and then reattached it to the robot's head. Writing in that position, with one index finger between the robot's legs, he found he didn't need to grip so tightly because the design supported the natural weight of his hand. "I'd always had horrible writer's cramp, and this helped," he says.

Not long afterward, Mr. Roche began fiddling in his garage with other pens, melting them down and shaping them into V's. He recalls telling his father: "I have this idea to reinvent the pen."

Through college, he continued thinking about his invention, choosing its name after a friend jarred him from a daydream -- "I was just thinking about that pen again," Mr. Roche told his pal. In June 2001, he teamed up with Mr. Ronsse, a former fraternity brother turned engineer, and the two began plotting development of the PenAgain, which they envisioned as a futuristic wishbone design modeled after the robot. Putting in $5,000 each, they launched Pacific Writing Instruments in December 2001, filed for patent approval, launched a Web site (www.penagain.com) and set up production in the Bay area."

Search: Big indexes versus microformats

In my mind, the end game is not about whether or not the search results page is good enough. Search is just one piece of the process, the negotiation. The transaction that ultimately matters to me is discovery. Indexing enables some very useful tools for broad searching across really wide data sets to narrow results to something digestible. And that story is really important in the evolution of the Internet. But there’s a new approach to finding things happening right now that may be more profound.

Soon search is going to be about the explicit relationships between people and the discovery mechanisms they create directly between each other. People who think like me, who have solved the same problems I have, who know people who can help me are going to provide a direct route to precisely the things that matter to me. I don’t have to negotiate with a machine when I can use the knowledge pool of my peers to locate exactly what I need when I need it.

This change became more obvious to me when tags came along. Tags are labels people add to pages. They are explicit organizing principles applied to things of interest. My connection to people and the tags that they use to track things of interest to them makes it possible to discover things that also matter to me. I don’t even have to ask my friends for their expertise...they are leaving breadcrumbs to their minds all over the Internet.

When I look in MyWeb, I see things that I need to read. They are items that people in my small network have saved and tagged. My explicit relationship to them makes the items more relevant than the results of a wide net cast over a huge data set that gives me data in a just-in-time kind of way...a very 1990’s way. For example, MyWeb shows me things that people who are living in a Web2.0 world discover about XML. That helps me stay in tune with the implications of XML in a new media environment in a far more precise and relevant way than any search result I get from a search engine on "XML". Further, I won’t likely check out music that is interesting to people who find XML fascinating because I have a different network of friends serving that need for me…again, not something I get from big indexes in search engines. You can’t say to Google, "show me new music that I might like".
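Here is a rough sketch of what that network-scoped discovery could look like in code. It assumes a hypothetical in-memory list of saved pages; the names `SavedPage` and `discover` and all of the sample data are made up for illustration and say nothing about how MyWeb actually works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SavedPage:
    """One page that somebody saved and tagged (hypothetical structure)."""
    url: str
    saved_by: str
    tags: frozenset


def discover(pages, my_network, tag):
    """Return URLs tagged `tag` by people in `my_network`, most-saved first."""
    counts = {}
    for page in pages:
        if page.saved_by in my_network and tag in page.tags:
            counts[page.url] = counts.get(page.url, 0) + 1
    # Rank by how many people in the network saved the page, then by URL
    # so the ordering is deterministic.
    return sorted(counts, key=lambda url: (-counts[url], url))


# Illustrative data only.
PAGES = [
    SavedPage("http://example.com/xml-and-feeds", "alice", frozenset({"xml", "rss"})),
    SavedPage("http://example.com/xml-and-feeds", "bob", frozenset({"xml"})),
    SavedPage("http://example.com/xml-schema-tips", "bob", frozenset({"xml"})),
    SavedPage("http://example.com/xml-spam", "stranger", frozenset({"xml"})),
    SavedPage("http://example.com/new-album", "carol", frozenset({"music"})),
]

XML_FRIENDS = {"alice", "bob"}
MUSIC_FRIENDS = {"carol"}

xml_reading_list = discover(PAGES, XML_FRIENDS, "xml")
music_picks = discover(PAGES, MUSIC_FRIENDS, "music")
```

The point of the sketch is that the same tag query gives different answers depending on which network I pass in, and pages tagged by people outside my network never show up at all.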

But tags are just the beginning of this breakthrough in precise discovery. Microformats are going to make it possible to mashup global data in a very local way. When people begin marking more things they interact with using explicit descriptive data (events, prices, ratings, playlists, etc.), then tools will evolve that give me the things that matter to me via the people that matter to me in the context that matters at the moment.

The hard part is making a fluid connection from a thing to a person to me. It must take no more than 2 seconds for a person to add microformat data. And there must be an immediate and beneficial gain upon completing the additional markup or they won’t do it.

It would be a mistake to assume that people will tag or add microformat data because they can. People will do it because it’s easy and because there’s an intrinsic incentive. When you rate something in the Yahoo! Music Engine, you get the sense that the system will offer related music that you like. When you tag things in MyWeb, your search results get more precise. When you post a change in Wikipedia, your post appears immediately on the live site. The more you interact with these tools, the smarter they become. That is what will differentiate the successful search tools in the next generation.

Chris Tolles of Topix.net, a former member of the Open Directory Project, likes to dispel the myths of tagging. He has a man vs machine view where machines are more likely to win the game of finding things:

"If I had a nickel for every starry eyed idealist point to tagging saving the world, I'd be able to fund my own blog search engine."

But the economics of participatory media are starting to form some tangible results. Umair Haque at Bubblegeneration explains the model:

"Web 2.0 is about the shift from network search economies, which realize mild exponential gains - your utility is bounded by the number of things (people, etc) you can find on the network - to network coordination economies, which realize combinatorial gains: your utility is bounded by the number of things (transactions, etc) you can do on the network."

It’s not man versus machine. It’s man helping machine to help man.

YAR (yet another reader)

The project comes from Matt Kaufman. Looks interesting.

A Web 2.0 business model for publishers

I think there's a model that is worth considering for any publisher, large or small, that will help them get into the game. Every publisher has 2 primary assets: audience and content. The more valuable of the two is the audience, so you have to be more careful with that piece. But the content is something you can exploit in this new world in some interesting ways that shouldn't be too scary.

If you look at the core of your content, the thing that differentiates you from everyone else is the data you have on the things that you cover. Columns should be used in a more blog-like environment to engage your audience. News is probably more useful as a net for catching new fish. The data that builds on the subjects that you cover over time, however, becomes something that the Web 2.0 world can use.

IDG, my former employer, has a very deep set of data on computers and software across its publications. Primedia knows a ton about cars. General interest newspapers know a lot about local businesses and local politics. Publishers need to package the core items in those important and unique data sets into normalized and usable XML databases. Hardware products. Software products. Cars. Businesses. Legislation. Then attach the related content you have to that database.

For example, include the links to the reviews of the iPod Nano and links to the news stories and blog posts that mention the iPod Nano with the database entry containing all the specs for that product. The specs are the core data. The URLs are related data. The product name is the key for that entry in the database. You once thought the feature story or the comprehensive review was your most important content item. No longer. The name of the object you're covering is what you need to build this database around.
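To make that concrete, here is a minimal sketch of one such entry built with Python's standard `xml.etree.ElementTree`. The element names (`product`, `specs`, `related`, `link`), the placeholder spec values, and the example.com URLs are all my own assumptions, not any publisher's real schema.

```python
import xml.etree.ElementTree as ET


def build_product_entry(name, specs, related_urls):
    """Build one database entry keyed on the product name."""
    entry = ET.Element("product", {"name": name})

    # Core data: the specs for the product.
    specs_el = ET.SubElement(entry, "specs")
    for key, value in specs.items():
        spec = ET.SubElement(specs_el, "spec", {"name": key})
        spec.text = value

    # Related data: URLs pointing back to the publisher's own coverage.
    related_el = ET.SubElement(entry, "related")
    for kind, url in related_urls:
        ET.SubElement(related_el, "link", {"type": kind, "href": url})

    return entry


entry = build_product_entry(
    "iPod Nano",
    # Placeholder values; a real entry would carry the actual specs.
    {"capacity": "placeholder", "weight": "placeholder"},
    [
        ("review", "http://example.com/reviews/ipod-nano"),
        ("news", "http://example.com/news/ipod-nano-launch"),
    ],
)
xml_text = ET.tostring(entry, encoding="unicode")
```

Anyone who republishes the entry gets the specs, and every `link` they carry along points back to the publisher's domain.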

When you have your data organized, post it publicly. Post it as XML. Let people take it. Let everyone take it...even some competitors. And use the Creative Commons Attribution-NonCommercial-ShareAlike license to protect yourself. Each item will contain some core data that, yes, people will post on their web sites, and attached to each item will be a bunch of URLs pointing to your domain that, yes, people will also include on their web sites. You will be able to maintain the page view model for a few more years by transitioning to Web 2.0 this way.

BBC has been making some progress in this direction with their Backstage effort. It's amazing to see how many variations republishers come up with for using the longitude and latitude data that is carried with the items in the BBC XML feeds. Follow their lead.

Publishers think of their readers and their advertisers as 2 different customer sets. There's a new one you need to serve - republishers. People who use your content and republish it (tools providers, bloggers, bigger publishers, etc.) may become useful sources of revenue. I see two obvious revenue models:

- Package your ads with your content. Advertisers can reach wider audiences, probably highly engaged readers. If you're short on page view inventory, then this is a great way to open up more inventory to drop in more ad impressions.

- Share revenue earned from use of your content. If you are making money from the ads then kick some of that back to your republishers. If they are making money from having your content, then have them kick some cash back to you.

Everyone wins here. Your content gets out there further along with your brand. Your readers are getting your content how they want it. You win new readers you couldn't reach before. Your advertisers are able to serve more ads to more targeted readers. Republishers are incentivized to work with you because you are helping them make money. You might even be squeezing out your competition as your content becomes more useful than theirs.

There are countless examples of businesses that have been outdone by competitors with less impressive products but who found better means of distribution or established better relationships with customers. Think of Web 2.0 as a way to reach new customers, build new distribution channels, and find new revenue streams.

It's not scary. It's fun.

My Feedburner stats: Rojo appears out of nowhere

I saw my subscriptions increase a bit the last week or so, and it looks like the culprit is Rojo (along with some prominent referral links from a few of the big guys), which hadn't appeared in my reports at all before. I'm guessing the reader market share changes have to do with the recent Feedburner redesign and that maybe that effort included some adjustments to their tracking system. It looks like My Yahoo! is on the rise, and it's possible that the Rojo usage is eating into the Bloglines usage. I haven't looked at Rojo in a while, so it's probably time to have another try with it.

One-click RSS subscription

Pete Freitag solved the problem with Solosub. You simply enter the URL of your RSS feed on Solosub.com. The tool then gives you the link to publish on your blog. When users click that link, they will be invited to select which RSS reader they want your RSS feed to be sent to. The user can choose to have Solosub remember that choice, and, from then on, the user will just click on any RSS feed that uses Solosub and suddenly be subscribed to that feed via their reader of choice.

Nicely done, Pete.
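The flow is simple enough to sketch in a few lines. This is only my guess at the logic, not Solosub's actual code: the reader names, the URL templates, and the chooser address are all hypothetical placeholders.

```python
from urllib.parse import quote

# Hypothetical subscribe-URL templates; real readers each define their own.
READER_TEMPLATES = {
    "reader-a": "http://reader-a.example.com/subscribe?url={feed}",
    "reader-b": "http://reader-b.example.com/add?feed={feed}",
}

# Hypothetical page where the user picks a reader the first time.
CHOOSER_URL = "http://chooser.example.com/choose?feed={feed}"


def subscribe_link(feed_url, remembered_reader=None):
    """Return where a click on the one-click link should send the user."""
    encoded = quote(feed_url, safe="")
    if remembered_reader in READER_TEMPLATES:
        # The user saved a preference earlier: go straight to that reader.
        return READER_TEMPLATES[remembered_reader].format(feed=encoded)
    # No saved preference yet: show the chooser first.
    return CHOOSER_URL.format(feed=encoded)


first_click = subscribe_link("http://example.com/feed.xml")
later_click = subscribe_link("http://example.com/feed.xml", "reader-a")
```

In a real service the remembered choice would presumably live in a cookie, which is why the second click can skip the chooser entirely.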

Here is my feed:

Update: Micah Laaker reminded me that there is another rather elegant solution for this from Jason Brome called "quicksub". I've seen this elsewhere, and it's nice because I may want to subscribe to a certain feed with one type of reader and another feed with a different reader. There's a clutter problem here, but it's definitely better than having stacks of RSS chiclets on your blog.

Testing the Yahoo! Publishing Network

Cool.

The setup process took me less than 10 minutes. Nice. I had a few customization options, but there's not a lot you can do with a dynamic text ad unit. I placed the ad unit in the left column of my blog, though I may move it around to try to improve performance a little.

Earnings will be given to charity, but I haven't decided which one. Ideas?

ProgrammableWeb.com

The way I discovered it is kind of cool, too. I added Don Loeb's RSS feed of saved pages from MyWeb to my Bloglines reader. Don doesn't blog, but he's an excellent source of what's going on out there. Fortunately, he tracks interesting things publicly, and we all can have a view into that via RSS.

Downloading podcasts with Yahoo! Music Engine

Anyhow, then I discovered on the YME plugins site that there is a podcast plugin. Nice! This now solves all my problems. I was using iTunes to pull down podcasts, Rhapsody to locate and hear individual artists and sometimes for the custom radio. I'm not sure about killing my Rhapsody account yet, but I might be getting everything I need out of YME now.

There's also a nice slurp feature that downloads all the music files from a web page when you drag and drop the URL into the plugin. I haven't tested the transfer with a player device yet. That will be key soon, but I'm just playing stuff off my laptop these days.

Blog This

The other thing I’d like to see on the news pages would be a "Tag This" form or "Save This" button right at the top of the story. I often save things to MyWeb with the intent of reading them later, and it would be nice to be able to do this from within an article page right near the headline. The Yahoo! toolbar is very useful for this, but it would make sense for publishers to offer this function inline on their pages since not everyone is a toolbar user.

Social Media metrics - identifying important core behaviors

There will always be bandits who try to derail an online social service. And there will always be contributors whose participation may be distracting to the core mission. So then how do you find the core contributors amongst the power users in a social media tool? I’m curious how you measure this, though I think there are probably 3 data points to watch:

1) identify self-organizing groups of contributors

2) measure individual behavior popularity

3) isolate outliers

The self-organizing groups will form the nucleus of the service’s advisory council. Then, the most popular individual behaviors will help determine which functions to develop more deeply. Bell-curving the behaviors will identify what the contributors find annoying and what they find admirable…both are important.

When core user behavior is understood, it’s a lot easier to allocate development resources and prioritize functionality. And it becomes more obvious how to convert new core users without disrupting the community.

Slashdot is a great example. They have a karma system for awarding contributors whose participation shapes the direction of the site. If you post a lot and those posts get published, you’ll get rights to moderate other people’s posts temporarily. Your moderation skills are then rated by meta moderators. The meta moderators then find themselves steering the editorial direction of the site.

The meta moderators are the nucleus of the system, the key to leading the other contributors. They have to make decisions on which types of posts should be encouraged and which should be discouraged. Outliers are eliminated organically as the collective moderation system selects the best posts for publishing.

If the moderation system slips, however, and bad posts get through, then the core users are going to get angry and leave the system. Losing 10% of the core user base is going to hurt a lot more than losing 10% of the public passersby.

I’m also curious how change occurs in these systems. Can the core user base become too rigid for what the wider public wants from the system? What if the business wants to make a strategic change?

Social Media metrics - counting the power users

The next question that comes out of the Social Media metrics discussion has to do with the ‘power user’. This name probably doesn’t work because if you’ve built a good social media service, then your users are also your creators and even strategic leaders, perhaps more important than the in-house staff who build your tools. Regardless, the coveted customer is the one who has enough passion or self-interest in your service to make it better with his or her contributions and perhaps pay for it or make your service valuable enough for others to pay for it.

There are 3 key metrics that help you identify who this person is. You can identify the power user when you can map the following behaviors on a curve and find the top 10 to 20% of users across all categories:

1) repeat usage

2) volume usage

3) quality contributions

Repeat usage is an indicator of immediacy and timeliness. Some people may be coming just to see your home page change. Maybe there’s a buzz factor from people talking about your site for a brief period of time. They might just be looky-loos. Alone, this metric does not define a power user. But with the others, it’s an indicator of someone who needs your service or who has become obsessed with it.

Volume usage is a better indicator of the power user by itself. However, he or she might just be diving into your service deeply to fulfill a specific need at a specific time. This user might just be on a research mission. But this customer is the most likely to convert to a power user if he or she isn’t already.

Contribution quality becomes the determining factor in defining your power user when combined with some mix of the other 2 behaviors. It may come in the form of conformity…someone who visits a lot, goes deep into the site, and participates in similar ways to everyone else. It may come in the form of contributions…someone who adds content to the system that others find valuable. Or it may come in the form of leadership…someone who organizes people and steers the direction of how others participate.

When you are able to identify the cross section of people who are in the top 10 or 20% in all these categories, then you are looking at your core power user customer base.

The top 10 to 20% in any one of these categories who are not also part of the other categories are potential conversions for you, but they are not likely to become long-term core contributors.
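Here is a rough sketch of that cross-section in code, assuming each metric is available as a simple per-user score. The function names, the 20% cutoff, and the sample numbers are made up for illustration. I'm also treating anyone who makes the top slice in at least one category but not all three as a conversion candidate, which is my reading of the idea rather than a tested definition.

```python
def top_fraction(scores, fraction):
    """Return the users whose score ranks in the top `fraction` of `scores`."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    keep = max(1, round(len(ranked) * fraction))
    return set(ranked[:keep])


def split_users(repeat, volume, quality, fraction=0.2):
    """Split users into core power users and conversion candidates."""
    tops = [top_fraction(metric, fraction) for metric in (repeat, volume, quality)]
    # Core power users rank in the top slice of every metric.
    core = tops[0] & tops[1] & tops[2]
    # Candidates rank in the top slice of at least one metric, but not all.
    candidates = (tops[0] | tops[1] | tops[2]) - core
    return core, candidates


# Made-up scores for ten users: visits per month, pages viewed per month,
# and an average quality rating of their contributions.
REPEAT = {"a": 30, "b": 28, "c": 10, "d": 9, "e": 8, "f": 7, "g": 6, "h": 5, "i": 4, "j": 3}
VOLUME = {"a": 200, "c": 180, "b": 50, "d": 40, "e": 35, "f": 30, "g": 25, "h": 20, "i": 15, "j": 10}
QUALITY = {"a": 9.5, "d": 9.0, "b": 6.0, "c": 5.0, "e": 4.0, "f": 3.5, "g": 3.0, "h": 2.5, "i": 2.0, "j": 1.0}

core, candidates = split_users(REPEAT, VOLUME, QUALITY)
# core is {"a"}; candidates are {"b", "c", "d"}
```

With these numbers only one user out of ten lands in the core group, which fits the idea that the real power user base is much smaller than any single metric suggests.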

If you're working on this kind of thing, please chime in. I'm throwing ideas out to get reactions and see if there are other ways to think about how to measure success in a social media world.

Disclosure

Somebody once told me that it’s a good idea to invest in the companies whose products matter to you. I like that concept, though my portfolio would not be a good proof point for it.

Social Media metrics - pursuing the paid subscription model

| Uniques | Visits | Pages/Visit | Page Views | CPM | $/Mo | $/Yr | $/Unique |
|---|---|---|---|---|---|---|---|
| 1,000,000 | 3 | 3 | 9,000,000 | $20 | $180,000 | $2,160,000 | $2.16 |
| 1,000,000 | 5 | 5 | 25,000,000 | $40 | $1,000,000 | $12,000,000 | $12.00 |

These are the metrics that drive the page view model. At 1M unique visitors per month, a media site can push annual revenue past $10M by focusing on a few dials:

1) Get people to return more often

2) Encourage them to visit more pages each time they come to your site

3) Offer higher value advertising

But each user is only worth about $10/year to you in that model, in the best case scenario, so you’ll always be dependent on advertising to keep the business afloat.
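The arithmetic behind the page view table can be written out as a small function. This is just a sketch of how I'm reading the columns: "Visits" is taken as visits per unique visitor per month, and CPM as dollars per thousand page views. The function and parameter names are my own.

```python
def page_view_revenue(uniques, visits_per_unique, pages_per_visit, cpm):
    """Estimate ad revenue from the page view dials."""
    page_views = uniques * visits_per_unique * pages_per_visit
    monthly = page_views / 1000 * cpm  # CPM is the price per 1,000 page views
    yearly = monthly * 12
    return {
        "page_views": page_views,
        "monthly": monthly,
        "yearly": yearly,
        "per_unique": yearly / uniques,
    }


# The two rows from the table.
low = page_view_revenue(1_000_000, 3, 3, 20)   # $180,000/mo, $2,160,000/yr
high = page_view_revenue(1_000_000, 5, 5, 40)  # $1,000,000/mo, $12,000,000/yr
```

Visits and pages per visit multiply each other, so nudging both from 3 to 5 nearly triples the page views before the CPM even changes.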

There’s another set of revenue streams that start to evolve out of social media which can enhance the page view opportunity and perhaps open a more powerful business model.

| Uniques | % Registered | Registered | % Power Users | Power Users | Sub Price | $/Mo | $/Yr |
|---|---|---|---|---|---|---|---|
| 1,000,000 | 25% | 250,000 | 50% | 125,000 | $5.00 | $625,000 | $7,500,000 |
| 1,000,000 | 50% | 500,000 | 50% | 250,000 | $5.00 | $1,250,000 | $15,000,000 |

The paid subscription model has been the carrot on the end of the stick for traditional media since the web began. So I won’t claim to have suddenly found an answer, but I will propose that the right kind of social media service might be able to drive that model successfully.

If you can get people to register and then build a base of power users who are willing to pay, then you get the benefit of reliable recurring revenue. The service will get stronger with more users and then incentivize them to participate even more. The service will sell itself, and you'll be able to drive up the percentage of lightweight users who will be easy to upsell into the paid service.
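The subscription table works the same way as a funnel. Here is a minimal sketch, assuming (as the table does) that every power user pays the monthly price; the function and parameter names are mine.

```python
def subscription_revenue(uniques, registered_rate, power_user_rate, monthly_price):
    """Estimate subscription revenue from the registration funnel."""
    registered = uniques * registered_rate
    power_users = registered * power_user_rate
    # Assumption: every power user pays the monthly subscription price.
    monthly = power_users * monthly_price
    return {
        "registered": registered,
        "power_users": power_users,
        "monthly": monthly,
        "yearly": monthly * 12,
    }


# The two rows from the table.
quarter = subscription_revenue(1_000_000, 0.25, 0.50, 5.00)  # $625,000/mo
half = subscription_revenue(1_000_000, 0.50, 0.50, 5.00)     # $1,250,000/mo
```

Doubling the registration rate doubles the revenue without touching the price, which is why getting people to register is the first dial to work on.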

Yet again, a lot can be learned from the Netflix example. In this case the deliverable, a DVD, has a high value to me. That value was about $4 per item at the local rental shop, which I used about twice a week, or roughly $36 per month minimum. I’m now getting probably double the number of films and spending half what I used to spend.

But the best part is that I’m getting highly relevant recommendations. I really like my local rental shop, and the staff there gives great recommendations. But the precision of my recommendations and the depth of my choices on Netflix are far greater than what my local shop offers. Further, I can browse both more broadly and again more deeply with a simple click or two.

My $18/month pays for better quality choices. What’s more amazing is that I’m happy to wait 2 days to receive these items.

Could that be the key? Better quality? Is it that simple? Social media is interesting, in part, because we can now use each other to find higher quality stuff in today’s information flood. That has a dollar value.

The lesson of Consumer Reports isn’t about easy access or even great user interface. They don’t employ any social media aspects to their business, but they made a bet on quality. That bet turned into nearly 2 million paid subscribers, recurring annual revenue without a single advertiser.

Your site’s ability to drive subscription revenue is fundamentally based on quality, whether that quality comes in the form of important content or important services.

Deliver something that really matters to your users, and the ones that care will pay for it. Social media tools might just be the way to give your site the edge that helps you turn that corner.

Googlator

An engineer here reminded me that Google Desktop wasn't the first service to track your online behavior and offer relevant things to click on.

The Internet: Good and Evil?

I’m listening to a beautifully depressing track called “Activated” from the band Film School that I heard on the Notes from the Underground podcast (thanks, Mitch). Sort of like the Doves with a touch of Elliott Smith. I’m reminded of some of the darker things that sit in the back of my mind…

Do we understand the inevitable negative repercussions of this fantastic investment so many people are making into building the Internet experience? There was once a time when slavery, cigarettes and petroleum-based fuels seemed like good ideas to a large segment of the population. Even fundamentally good ideas like democracy create dangerous exhaust at some level. Do we have any clue what the damage is that the Internet is likely to bring to humanity at some point? What is the reaction to our actions?

My rose-colored lenses are amazed by the power of the Internet and the positive influence it has already had on the world in these early days of its growth. I’m boggled by the possibilities yet to be discovered because of it. But intuitively I’m sure there’s something equally negative that has yet to be found or developed…or perhaps it already has, and I’m just blind to it.
